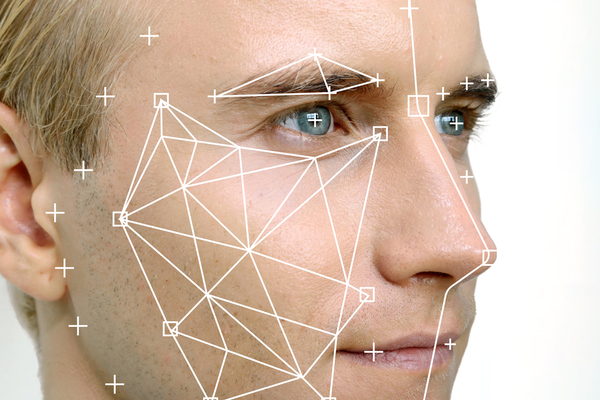

It’s easy to be alarmist about facial recognition, but at the end of the day, it’s a simple matching system. The algorithm establishes a set of features and looks for matches, just like a search engine or a speech-to-text system. The problems come with how people use that capability — whether it’s commercial marketing or, more controversially, police surveillance.

Those problems get particularly bad if you don’t use the system the way it’s designed. A recent Georgetown study found that NYPD officers purposely manipulate images before they input them into the system, often pasting in stock eyes or mouths in order to bring an image in line with the system’s standards. In one particularly egregious case, officers actually used a picture of actor Woody Harrelson, based on an eyewitness who said that the perpetrator looked like Harrelson.

Facial recognition systems were never built to be used this way, and the result is predictable enough: high error rates. If you trust police, that might be worthwhile (a few more loose faces to sift through to generate leads), but if you don’t, the picture is much bleaker. It’s rare for a person to be arrested solely on the basis of a facial recognition match, but a false match is likely to lead to a police stop. In legal terms, that lets the algorithm stand in for reasonable suspicion, which is the standard for briefly detaining a person to investigate further.

But if an algorithm is used incorrectly, police can generate that reasonable suspicion out of almost anything. And because the sensitivity of those algorithms is easy to adjust (and those adjustments are hard to detect), it’s easy to tweak the system until a given face comes back as a match. That’s a hard problem to solve, particularly if you don’t trust the people using the tools. And for critics of facial recognition, it makes the entire system too dangerous to trust.
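
To see why sensitivity matters so much, here is a minimal sketch of threshold-based matching. It is a toy illustration, not how any real police system works: the three-number "feature" lists, the names in `GALLERY`, the `find_matches` helper, and the choice of cosine similarity as the score are all assumptions made up for the example.

```python
import math


def cosine_similarity(a, b):
    """Score how closely two feature lists point in the same direction (1.0 = identical)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def find_matches(probe, gallery, threshold):
    """Return the gallery names whose similarity to the probe meets the threshold."""
    return sorted(
        name
        for name, features in gallery.items()
        if cosine_similarity(probe, features) >= threshold
    )


# Hypothetical "face features"; real systems use far longer vectors.
GALLERY = {
    "person_a": [0.9, 0.1, 0.3],
    "person_b": [0.7, 0.4, 0.5],
    "person_c": [0.1, 0.9, 0.2],
}
PROBE = [0.88, 0.15, 0.28]  # hypothetical features from the submitted photo

strict = find_matches(PROBE, GALLERY, threshold=0.99)
loose = find_matches(PROBE, GALLERY, threshold=0.90)
very_loose = find_matches(PROBE, GALLERY, threshold=0.30)

print("strict:", strict)
print("loose:", loose)
print("very loose:", very_loose)
```

Nothing about the photo or the database changes between the three calls. Only the threshold moves, and each step down adds another person to the list of "matches."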

—

Photo Credit: Zapp2Photo / Shutterstock.com